Nathan Jones, Tom Schofield and Sam Skinner

The works contrast human motability and modes of thinking with the probabilistic and programmatic nature of computer processing, whilst also showing how both are hybridised and made to compete within cultures around hi-tech ‘innovation’.

In the works, a recurrent neural network (RNN) is used to calculate the statistical probability that a given letter will come next, given what has gone before, based on what it has learned from a set corpus of texts. Other than the texts it is fed, the RNN has no internal dictionary or grammar, meaning that the model is ecologically less costly to train and run than big-data models, and more transparent: the RNN only ‘knows’ what we give it, so audiences are afforded some insight into how source texts determine what the machines output as writing. Our RNNs are also more error prone: the machines can and do make spelling errors, talking a fascinatingly textured patois from the statistical averaging of their datasets.
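As a rough illustration of what letter-level prediction means, and not the code used in the works, the sketch below runs a tiny character-level RNN forward over a prefix and returns a probability for every letter that could come next. Everything in it is an assumption made for illustration: the function names, the hidden size of 8, the toy corpus, and above all the weights, which are random and untrained, so the probabilities it returns are arbitrary. Training, which is where the corpus shapes those probabilities, is left out.

```python
import math
import random

def build_vocab(corpus):
    """The model's whole 'alphabet' is whatever characters the corpus contains."""
    chars = sorted(set(corpus))
    return chars, {c: i for i, c in enumerate(chars)}

def init_params(vocab_size, hidden_size, seed=0):
    """Random, UNTRAINED weights (illustrative only; a real model learns these)."""
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {
        "Wxh": mat(hidden_size, vocab_size),   # letter -> hidden state
        "Whh": mat(hidden_size, hidden_size),  # previous hidden -> hidden state
        "Why": mat(vocab_size, hidden_size),   # hidden state -> letter scores
    }

def step(params, h, char_index):
    """One recurrent step: fold the current letter into the running state."""
    new_h = []
    for j in range(len(h)):
        total = params["Wxh"][j][char_index]  # one-hot input picks one column
        total += sum(params["Whh"][j][k] * h[k] for k in range(len(h)))
        new_h.append(math.tanh(total))
    return new_h

def next_letter_probs(params, h):
    """Softmax over letter scores: one probability per character, summing to 1."""
    logits = [sum(w * hk for w, hk in zip(row, h)) for row in params["Why"]]
    top = max(logits)
    exps = [math.exp(score - top) for score in logits]
    norm = sum(exps)
    return [e / norm for e in exps]

def predict(params, chars, index, prefix, hidden_size):
    """Read the prefix letter by letter, then score every possible next letter."""
    h = [0.0] * hidden_size
    for c in prefix:
        h = step(params, h, index[c])
    return dict(zip(chars, next_letter_probs(params, h)))

# Toy corpus and prefix, chosen only for this example.
corpus = "the reader rereads the reader"
chars, index = build_vocab(corpus)
params = init_params(len(chars), hidden_size=8)
dist = predict(params, chars, index, "the re", hidden_size=8)
```

Here `dist` maps each character in the corpus to a probability. Because the vocabulary is built from the corpus alone, a letter that never appears in the source texts cannot appear in the output, which is one concrete sense in which such a model only ‘knows’ what it is given.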

We can ourselves learn from watching the machines learn to write like this – arriving at meaning from the level of the letter, rather than the network of semantic relations. The impoverished outputs of the small dataset illustrate the punishing scale of big data on which hi-fidelity language models rely, but they also generate new trajectories, new futures for language to bleed into an age we increasingly share with other forms of intelligence.

The Rereader, 2019

A device for scanning books and playing them back as ‘speed reader’ versions. The book scanner transforms the physical book into a series of algorithmic, semantic and aesthetic text performances. Every book scanned contributes to the training of a neural net, producing new texts.

When a page is scanned, the machine goes into a countdown, parsing the image for words.

The words are then played in order twice. The first time we see the location of those words on the page, and the second time the side screen flashes when it reaches ‘important’ words.

Here the Rereader is presented alongside some of Torque’s previous editions, and users are invited to read our work in its machine-augmented version, while helping train a bot that will create new versions of it.

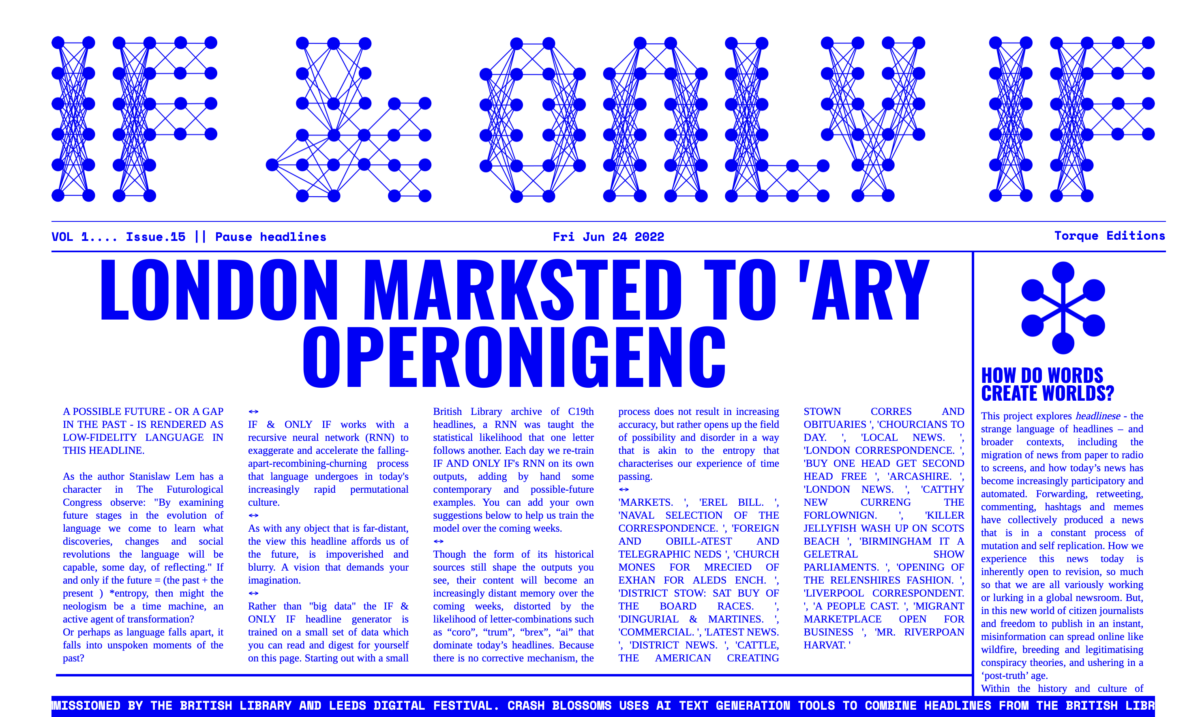

Crash Blossoms / If and Only If, 2021

An artificial intelligence newspaper headline writer trained on a combination of 19th-century headlines from The British Library, contemporary news, and user submissions. This work was the subject of a paper published in DATA browser 10 CURATING SUPERINTELLIGENCES.

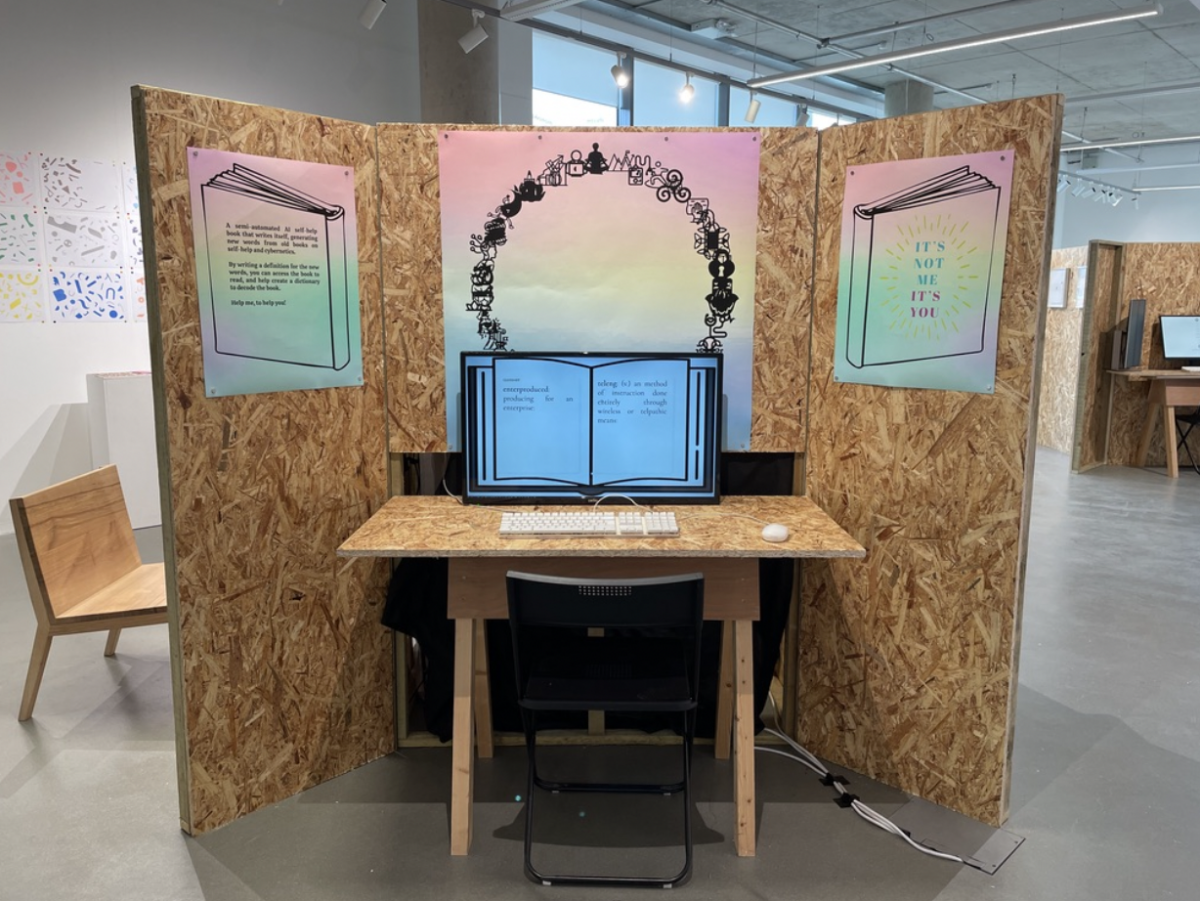

It’s not you, it’s me, 2022

A semi-automatic self-help book writer trained on a corpus of self-help and cybernetics literature, which asks for your help to define the neologisms it stumbles across. It was previously shown at Exhibitions Research Lab (Liverpool) and NeMe (Cyprus); future iterations will be shown alongside an emergent book published page by page, featuring core text, definitions and annotations.